Ever since Apple announced its new child safety features for the iOS 15 update on August 6, users worldwide have been worried about iOS 15 privacy. People are asking questions like ‘Is Apple going to scan my photos?’ and ‘Is my iPhone safe on iOS 15?’, along with many more about the iOS 15 photo scanning system.

With the new iOS 15 photo scanning feature, Apple aims to help child protection groups protect children from trafficking and sexual exploitation. The new iOS 15 algorithm will scan images on iPhones, iPads, Macs, Apple Watches, iMessage, and iCloud to detect known Child Sexual Abuse Material (CSAM).


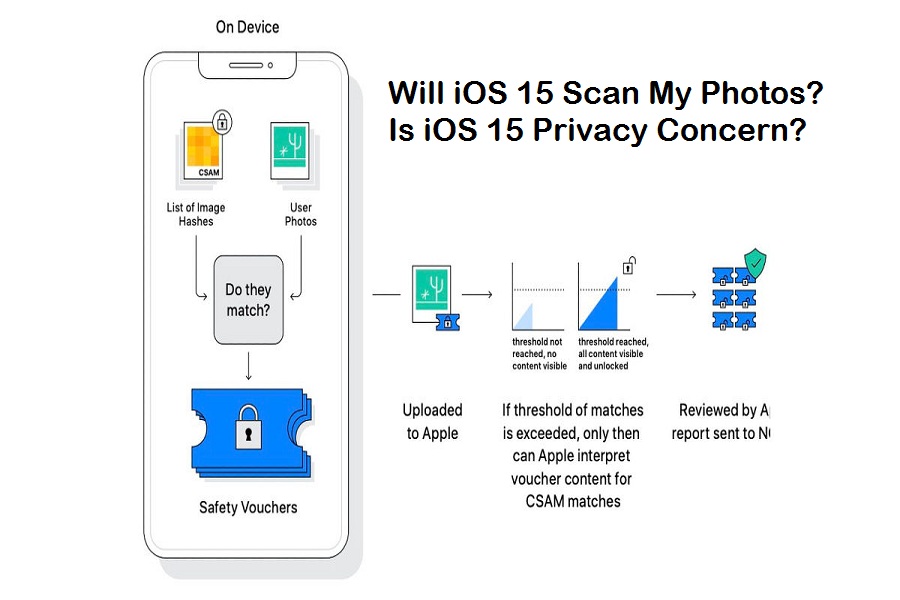

Does that mean Apple is going to scan every private photo of yours? You are not alone; this question is on everyone’s mind. According to Apple, the company will compare images only against a database of known CSAM images and flag only those that match.

“CSAM Detection enables Apple to accurately identify and report iCloud users who store known Child Sexual Abuse Material (CSAM) in their iCloud Photos accounts. Apple servers flag accounts exceeding a threshold number of images that match a known database of CSAM image hashes so that Apple can provide relevant information to the National Center for Missing and Exploited Children (NCMEC),” the company said in a technical document. They also added, “The threshold is selected to provide an extremely low (1 in 1 trillion) probability of incorrectly flagging a given account.”
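
To make the threshold idea in Apple’s statement concrete, here is a minimal Python sketch. It is not Apple’s implementation: Apple describes a perceptual hash (NeuralHash) combined with cryptographic techniques such as private set intersection and threshold secret sharing, whereas this sketch uses an ordinary SHA-256 digest (which only matches byte-identical files) and a plain set. The function names and the threshold value are hypothetical, chosen only for illustration.

```python
import hashlib
from typing import Iterable


def image_hash(image_bytes: bytes) -> str:
    # Hypothetical stand-in for Apple's perceptual hash: SHA-256 only
    # matches exact byte-for-byte copies, unlike a perceptual hash.
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images: Iterable[bytes], known_hashes: set[str]) -> int:
    """Count how many of an account's images appear in the known-hash database."""
    return sum(1 for img in images if image_hash(img) in known_hashes)


def should_flag_account(images: Iterable[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Flag the account only when its match count reaches the (hypothetical) threshold.

    With threshold > 1, a single match is not enough to flag an account.
    """
    return count_matches(images, known_hashes) >= threshold


# Usage: one matching image is below a threshold of 2, so nothing is flagged.
known = {image_hash(b"known-image-1"), image_hash(b"known-image-2")}
library = [b"vacation photo bytes", b"known-image-1", b"cat photo bytes"]
print(should_flag_account(library, known, threshold=2))  # False: only 1 match
```

The threshold is the key design choice: because an account is flagged only after several independent matches, one accidental match on its own does not trigger a report.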

Apple has long emphasized user privacy, and the company says this feature does not compromise users’ private data because it can only detect images that match known CSAM.

If an account is flagged for matching CSAM images, Apple says a human reviewer will verify the matches before the account is reported to the National Center for Missing and Exploited Children (NCMEC).

Apple’s new iOS 15 photo scanning falls into two categories. One feature scans images stored in iCloud Photos, and the other scans iMessage images sent or received by a child account. If an image appears to be sexually explicit, the child sees a warning. If the child views or sends the image anyway, their parents will be alerted. This parental alert applies only to children aged 12 or younger.

When the news broke, WhatsApp CEO Will Cathcart tweeted: “This is an Apple built and operated surveillance system that could very easily be used to scan private content for anything they or a government decides it wants to control. Countries where iPhones are sold will have different definitions on what is acceptable.”

He added: “Instead of focusing on making it easy for people to report content that’s shared with them, Apple has built software that can scan all the private photos on your phone — even photos you haven’t shared with anyone. That’s not privacy.”

How will Apple treat family photos of kids playing with bubbles in the bath? What do you think about it as an Apple user? Share your feedback in the comments.

Many prominent figures have criticized this feature. Let’s take a look at some of them.